The Exponential Family: The Unifying Thread Behind Generalised Linear Models

Imagine walking into a grand library where every statistical distribution sits neatly on a shelf—Normal, Binomial, Poisson, Exponential, and many more. To the untrained eye, each looks distinct, bound in its own mathematical cover. Yet, as you wander deeper, you realise there’s a hidden order—a master catalogue that connects them all under one elegant framework. That invisible structure is the Exponential Family. It’s the librarian of probability, quietly ensuring consistency, simplicity, and harmony in how data behaves and how models understand the world.

This concept lies at the heart of advanced modelling techniques and is one of the first “aha!” moments for anyone delving into probability theory or machine learning. Students on a Data Scientist course in Mumbai often encounter this family early on, discovering how its elegant form supports the engines behind regression, classification, and inference.


A Family With Many Faces

Think of the Exponential Family as a lineage of musical instruments. Each distribution—Normal, Bernoulli, Poisson—plays a unique tune, but all follow the same musical notation. Their sounds differ, yet they share a core rhythm and structure that makes them part of one orchestra.

Mathematically, the Exponential Family unites these distributions through a shared form: the probability of an observation is an exponential of a term that is linear in its sufficient statistics, weighted by the distribution’s natural parameters. That phrase may sound complex, but it’s a way of saying that behind every dataset drawn from such a distribution lies a summarised essence, a sufficient statistic, that holds all the information the data carry about the parameter. This structure allows statisticians to perform inference efficiently, just as a musician reads notes instead of memorising each sound.
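Written out, that shared form looks like the first line below. Here h(x) is a base measure, η(θ) the natural parameter, T(x) the sufficient statistic and A(θ) the log-partition function that keeps the distribution normalised. This notation is the standard textbook one rather than anything defined in this article. Rewriting three familiar distributions in that shape shows the common skeleton:

```latex
p(x \mid \theta) = h(x)\,\exp\!\big(\eta(\theta)^{\top} T(x) - A(\theta)\big)

\text{Bernoulli: } \pi^{x}(1-\pi)^{1-x} = \exp\!\Big(x \log\tfrac{\pi}{1-\pi} + \log(1-\pi)\Big),
\quad \eta = \log\tfrac{\pi}{1-\pi},\; T(x) = x,\; A = -\log(1-\pi) = \log(1+e^{\eta}),\; h(x) = 1

\text{Poisson: } \frac{\lambda^{x} e^{-\lambda}}{x!} = \frac{1}{x!}\exp\big(x \log\lambda - \lambda\big),
\quad \eta = \log\lambda,\; T(x) = x,\; A = \lambda = e^{\eta},\; h(x) = \tfrac{1}{x!}

\text{Normal: } \eta = \Big(\tfrac{\mu}{\sigma^{2}},\, -\tfrac{1}{2\sigma^{2}}\Big),\; T(x) = (x,\, x^{2}),\;
A = \tfrac{\mu^{2}}{2\sigma^{2}} + \log\sigma,\; h(x) = \tfrac{1}{\sqrt{2\pi}}
```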

When students explore this framework during a Data Scientist course in Mumbai, they realise that many algorithms—from logistic regression to softmax classifiers—are built upon these principles. Understanding this connection transforms abstract formulas into a symphony of relationships between data and distribution.
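One concrete instance of that connection is sketched below in plain Python. A softmax classifier outputs the mean of a categorical distribution, and that mean is the gradient of the categorical log-partition function A(η) = log Σ exp(η_k). The check compares softmax with a finite-difference gradient of log-sum-exp. The function names are illustrative and do not come from any library.

```python
import math


def log_partition(eta):
    """A(eta) = log(sum_k exp(eta_k)) for a categorical distribution, computed stably."""
    m = max(eta)
    return m + math.log(sum(math.exp(e - m) for e in eta))


def softmax(eta):
    """Mean of the categorical distribution: p_k = exp(eta_k - A(eta))."""
    a = log_partition(eta)
    return [math.exp(e - a) for e in eta]


def numerical_gradient(f, eta, h=1e-6):
    """Central finite-difference gradient of f at eta."""
    grad = []
    for k in range(len(eta)):
        up, down = list(eta), list(eta)
        up[k] += h
        down[k] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


scores = [2.0, 0.5, -1.0]  # illustrative natural parameters (logits)
print(softmax(scores))
print(numerical_gradient(log_partition, scores))
```

The two printed lists agree to about six decimal places, which is the sense in which softmax is the natural-parameter-to-mean map for the categorical member of the family.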

The Secret Ingredient: Sufficient Statistics

In every mystery story, there’s a clue that reveals the whole plot. Sufficient statistics serve this role in the Exponential Family. They condense raw data into a compact summary, often just a handful of numbers, that contains all the information the data hold about a model’s parameters.

Imagine a detective solving a case using only fingerprints, even if the entire crime scene is chaotic. The fingerprints alone are enough—they’re “sufficient.” Similarly, in statistical inference, sufficient statistics eliminate unnecessary noise and distil information into its purest form.

For example, for data drawn from a Normal distribution, the sample mean and sample variance (equivalently, the number of observations, their sum and the sum of their squares) summarise everything the sample says about the distribution’s mean and variance. Once you know these numbers, the individual data points might as well be echoes: they add no further information about those parameters. This simplicity is why researchers love the Exponential Family. It turns data complexity into compact, exact summaries.
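A minimal sketch in plain Python, assuming independent, identically distributed Normal data. The three numbers n, Σx and Σx² are enough to recover the maximum-likelihood mean and variance. Because they are sums, summaries computed on separate chunks of data can simply be added together. The function names are hypothetical.

```python
import statistics


def normal_sufficient_stats(xs):
    """Return (n, sum of x, sum of x**2), the sufficient statistics of an i.i.d. Normal sample."""
    return len(xs), sum(xs), sum(x * x for x in xs)


def merge_stats(a, b):
    """Sufficient statistics are sums, so summaries of two chunks combine by addition."""
    return tuple(u + v for u, v in zip(a, b))


def normal_mle_from_stats(stats):
    """Maximum-likelihood estimates of the mean and variance from the summary alone."""
    n, s1, s2 = stats
    mu_hat = s1 / n
    # The MLE of the variance divides by n (not n - 1). This textbook formula can
    # lose precision when the mean is large relative to the spread of the data.
    var_hat = s2 / n - mu_hat ** 2
    return mu_hat, var_hat


data = [2.1, 3.4, 1.9, 4.0, 2.6, 3.3]
summary = merge_stats(normal_sufficient_stats(data[:3]), normal_sufficient_stats(data[3:]))
mu_hat, var_hat = normal_mle_from_stats(summary)

# Same answers as working from the full data set.
print(mu_hat, statistics.fmean(data))
print(var_hat, statistics.pvariance(data))
```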

Why It Powers Generalised Linear Models

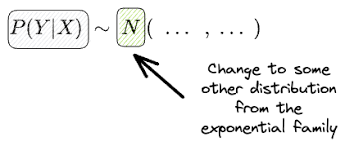

Generalised Linear Models (GLMs) are like the universal adaptors of the modelling world. They extend the humble linear regression to handle counts, probabilities, and even skewed data. The reason GLMs are so powerful is their deep connection to the Exponential Family.

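Each GLM corresponds to a member of this family: logistic regression to the Bernoulli, Poisson regression to the Poisson, and ordinary (Gaussian) linear regression to the Normal. The link function is the mathematical bridge between the expected value of the outcome and the linear combination of predictors. Its canonical choice comes straight from the distribution’s exponential-family form: it maps the mean to the natural parameter, giving the logit link for the Bernoulli, the log link for the Poisson, and the identity link for the Normal. Non-canonical links, such as the probit for binary outcomes, are also allowed.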
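The sketch below checks that relationship numerically, assuming the one-parameter forms of each distribution (for the Normal, a known variance σ² = 1). The mean is the derivative of the log-partition function, μ = A′(η). Applying the canonical link to that mean should return η. The dictionaries and function names are illustrative assumptions, not a library API.

```python
import math

# Log-partition functions A(eta) written in the natural parameter
# (Normal with known variance sigma^2 = 1).
LOG_PARTITION = {
    "bernoulli": lambda eta: math.log1p(math.exp(eta)),
    "poisson": math.exp,
    "normal": lambda eta: eta ** 2 / 2,
}

# Canonical links g(mu): each maps the mean back to the natural parameter.
CANONICAL_LINK = {
    "bernoulli": lambda mu: math.log(mu / (1 - mu)),  # logit
    "poisson": math.log,                              # log
    "normal": lambda mu: mu,                          # identity
}


def mean_from_natural(family, eta, h=1e-6):
    """Mean mu = A'(eta), approximated with a central finite difference."""
    A = LOG_PARTITION[family]
    return (A(eta + h) - A(eta - h)) / (2 * h)


for family in LOG_PARTITION:
    mu = mean_from_natural(family, 0.7)
    print(f"{family}: mean={mu:.6f}, link(mean)={CANONICAL_LINK[family](mu):.6f}")
```

For every family, the second printed number comes back as 0.7 up to rounding, which is what "the canonical link is the inverse of A′" means in practice.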

In practical terms, this connection allows us to model diverse types of outcomes using a single unifying principle. It’s as if you’ve mastered a language so flexible that you can describe poetry, news, and research all with the same syntax. That’s the elegance of the Exponential Family—it transforms statistical chaos into expressive order.
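To make the "single unifying principle" concrete, here is a minimal sketch of iteratively reweighted least squares (IRLS), the textbook fitting routine for GLMs. The same loop fits Poisson, logistic and ordinary regression; only the family’s link, inverse link and variance function change. It assumes canonical links, unit dispersion and a single predictor plus intercept, and the `FAMILIES` table and function names are hypothetical.

```python
import math

# Hypothetical family table: (canonical link g, inverse link g^-1, variance function V).
FAMILIES = {
    "normal": (lambda mu: mu, lambda eta: eta, lambda mu: 1.0),
    "bernoulli": (
        lambda mu: math.log(mu / (1 - mu)),
        lambda eta: 1 / (1 + math.exp(-eta)),
        lambda mu: mu * (1 - mu),
    ),
    "poisson": (math.log, math.exp, lambda mu: mu),
}


def fit_glm_irls(x, y, family, iters=25):
    """Fit eta = b0 + b1 * x by IRLS (canonical links, unit dispersion only)."""
    link, inv_link, variance = FAMILIES[family]
    b0, b1 = link(sum(y) / len(y)), 0.0  # start from the intercept-only fit
    for _ in range(iters):
        s_w = s_wx = s_wxx = s_wz = s_wxz = 0.0
        for xi, yi in zip(x, y):
            eta = b0 + b1 * xi
            mu = inv_link(eta)
            # With a canonical link, d(mu)/d(eta) = V(mu), so the IRLS weight is
            # V(mu) and the working response is eta + (y - mu) / V(mu).
            w = variance(mu)
            z = eta + (yi - mu) / w
            s_w += w
            s_wx += w * xi
            s_wxx += w * xi * xi
            s_wz += w * z
            s_wxz += w * xi * z
        # Solve the 2x2 weighted least-squares normal equations for (b0, b1).
        det = s_w * s_wxx - s_wx ** 2
        b0 = (s_wxx * s_wz - s_wx * s_wxz) / det
        b1 = (s_w * s_wxz - s_wx * s_wz) / det
    return b0, b1


x = [0, 1, 2, 3, 4, 5]
print(fit_glm_irls(x, [1, 2, 2, 4, 6, 9], "poisson"))
print(fit_glm_irls(x, [0, 0, 1, 0, 1, 1], "bernoulli"))
print(fit_glm_irls(x, [1.1, 1.9, 3.2, 3.8, 5.1, 6.0], "normal"))
```

For the Normal family the weights are all 1 and the working response equals y, so the first iteration already returns the ordinary least-squares line. A production fitter would add a convergence test and guard against separation in the logistic case.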

The Beauty of Conjugacy and Simplicity

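Another reason the Exponential Family is so central to modern data science is its friendliness to Bayesian inference. Every exponential-family likelihood has a conjugate prior: a family of priors chosen so that the posterior belongs to the same family as the prior. A Beta prior on a Bernoulli success probability yields a Beta posterior, and a Gamma prior on a Poisson rate yields a Gamma posterior. This property, called conjugacy, makes updating beliefs with new data beautifully straightforward.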
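Two textbook conjugate pairs make this concrete. The first is a Beta prior on a Bernoulli probability. The second is a Gamma prior on a Poisson rate; the shape/rate parameterisation used here is an assumption. In both cases the posterior parameters are the prior parameters plus the data’s sufficient statistics, so updating in stages gives the same answer as updating all at once. The function names in this sketch are hypothetical.

```python
def beta_bernoulli_update(alpha, beta, observations):
    """Beta(alpha, beta) prior + Bernoulli 0/1 observations -> Beta posterior parameters."""
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures


def gamma_poisson_update(shape, rate, counts):
    """Gamma(shape, rate) prior on a Poisson rate + observed counts -> Gamma posterior."""
    return shape + sum(counts), rate + len(counts)


# Batch update with six coin flips, starting from a Beta(2, 2) prior.
batch = beta_bernoulli_update(2, 2, [1, 0, 1, 1, 0, 1])

# Updating in two stages reaches the same posterior as updating all at once.
stage1 = beta_bernoulli_update(2, 2, [1, 0, 1])
stage2 = beta_bernoulli_update(*stage1, [1, 0, 1])
print(batch, stage2)  # both (6, 4)

# Gamma(1, 1) prior on a Poisson rate, then three observed counts.
shape, rate = gamma_poisson_update(1.0, 1.0, [3, 5, 4])
print(shape, rate, shape / rate)  # posterior mean of the rate = shape / rate
```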

Think of it as a recipe that always fits neatly back into the same book. No matter how many new ingredients (data points) you add, the recipe adjusts itself without becoming messy or incompatible: each update simply adds the new data’s sufficient statistics to the prior’s parameters. This allows Bayesian models to scale gracefully, keeping computation cheap as data accumulate and inference interpretable.

Such simplicity becomes invaluable in real-world applications—be it predicting stock movements, analysing customer churn, or optimising delivery networks. That’s why mastering these ideas isn’t just theoretical—it’s a skill that separates proficient analysts from exceptional ones.

Beyond Equations: The Philosophy of Structure

At its core, the Exponential Family isn’t just a set of formulas; it represents a philosophy of structure in uncertainty. It tells us that randomness isn’t chaotic—it follows patterns, relationships, and symmetries that can be expressed elegantly. Understanding it changes how we see data. Instead of scattered dots on a graph, we begin to perceive relationships that weave these dots into coherent shapes.

This mindset shift is what every aspiring data professional experiences on their learning journey. By seeing how the Exponential Family underpins statistical theory, one realises that mathematical models are not about control—they’re about harmony with uncertainty.

Conclusion

The Exponential Family is the quiet backbone of modern statistics—a framework that harmonises variety into unity. It enables us to compress data efficiently, connect models seamlessly, and interpret uncertainty meaningfully. For learners stepping into the analytical world, appreciating this family is like understanding the grammar of data’s universal language.

It’s no surprise that top institutes emphasise it early in their curriculum: once you understand how the Exponential Family ties together everything from linear models to the output layers of neural networks, you stop memorising formulas and start genuinely thinking like a data scientist.